This is another continuation of my main blog post as I work through my three use cases, and this one is something a lot of people have been asking me about: a 2-node vSAN edge site with a remote witness. But what if the remote witness sat in the same room and ran on a Raspberry Pi? That's the option I'll test here.

To set this up, I'm again using William Lam's virtual ESXi content library to deploy two virtual ESXi hosts, which I'll configure as a 2-node vSAN cluster. As before, the VMware Fling running on a Raspberry Pi 4 will act as my vSAN witness.

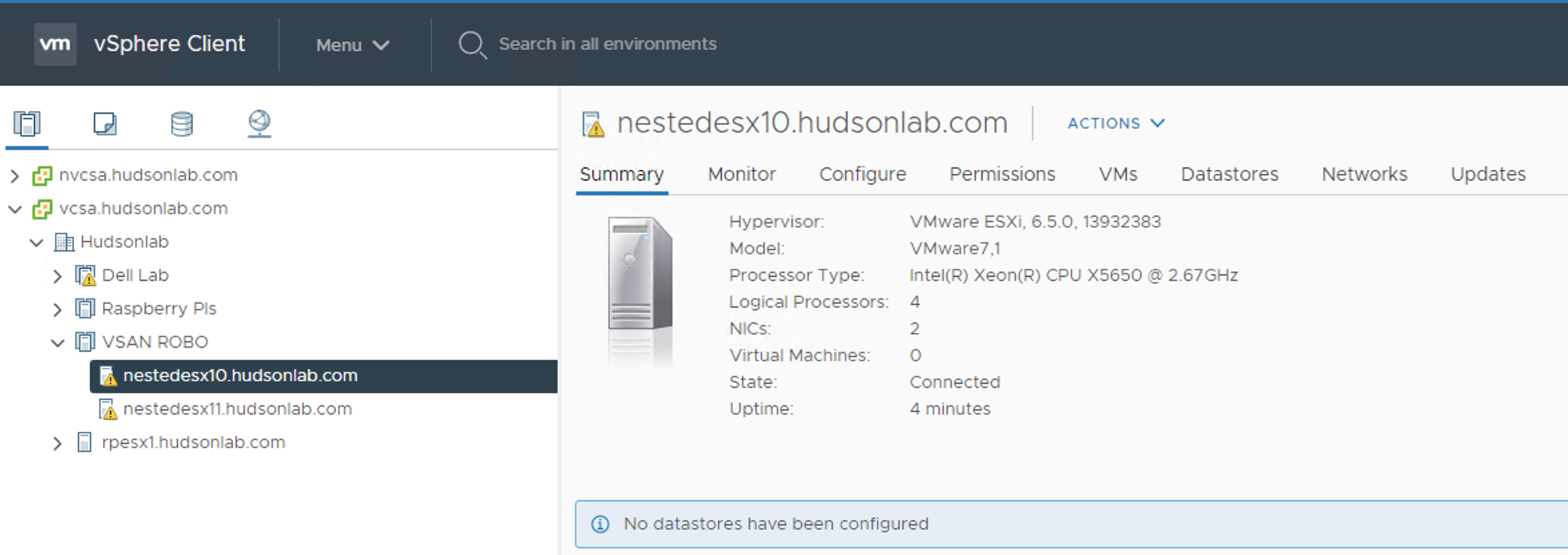

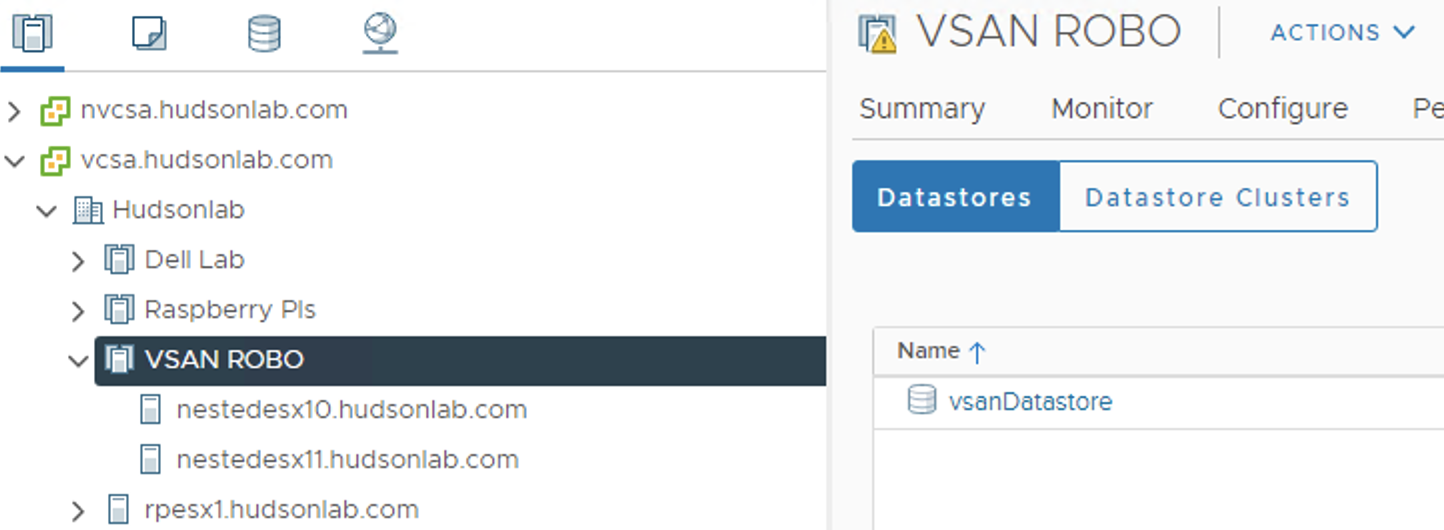

Here's my setup: a cluster called vSAN ROBO with two nested ESXi hosts which, as you can see, show the error "No datastores have been configured". Also visible in the view below is the single Raspberry Pi 4 running the ESXiOnArm Fling that I'll use as my remote witness. If you need instructions on how I set up the RP4 with ESXi and configured it for vSAN, review the main blog post above and my other post on how I set up a 2-node vSAN cluster on RP4s running ESXi here.

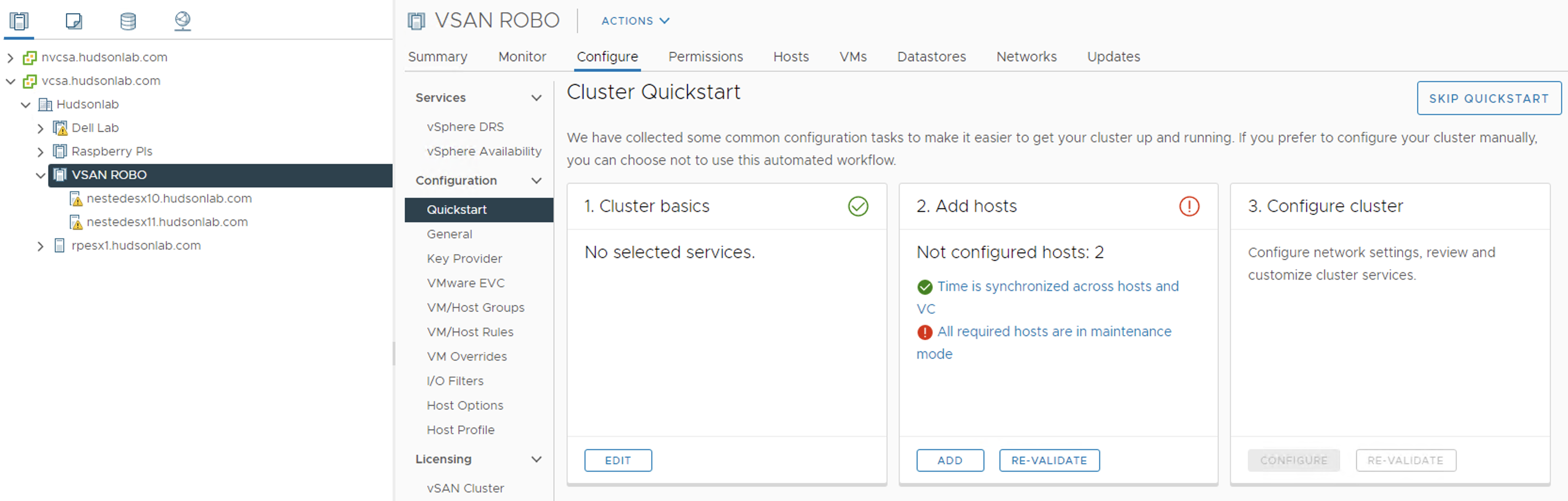

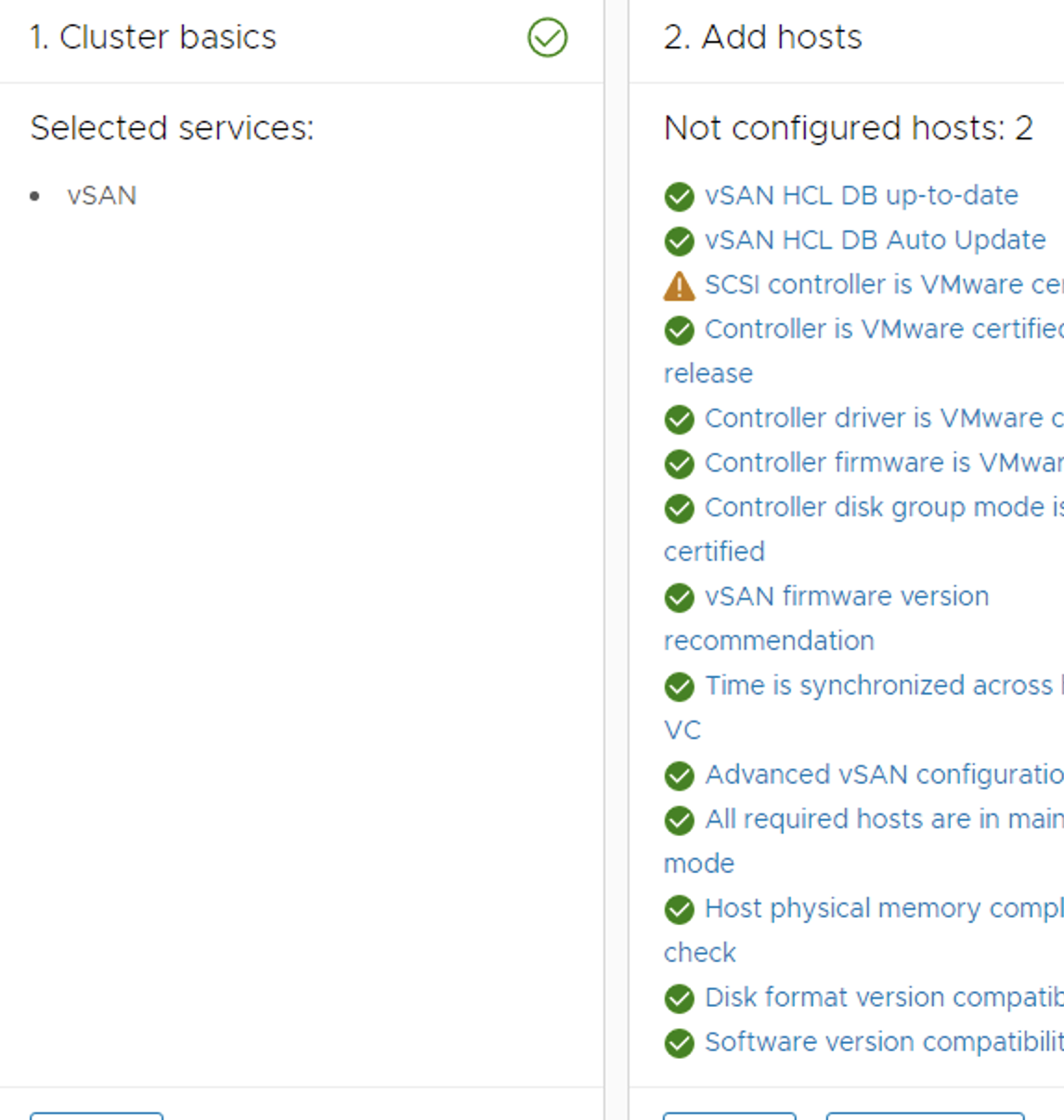

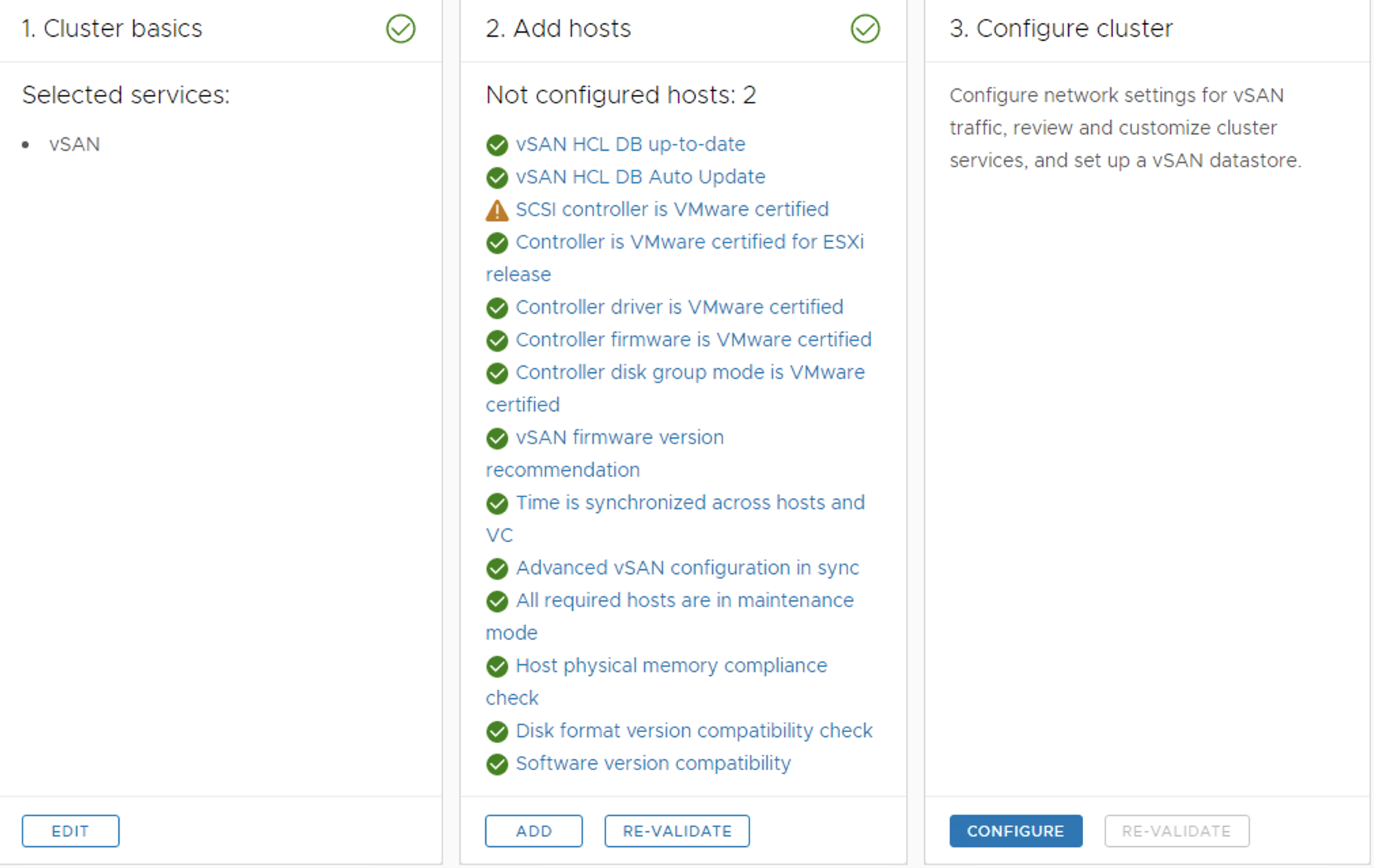

After placing both nested hosts into maintenance mode, I'll use the cluster Quickstart option to configure the 2-node vSAN ROBO setup. In step 1, Cluster basics, I'm only turning on vSAN services.

With the hosts in maintenance mode and vSAN services enabled, step 2, Add hosts, has already run the pre-checks for me, so at this point I'll walk through step 3 and configure the cluster.

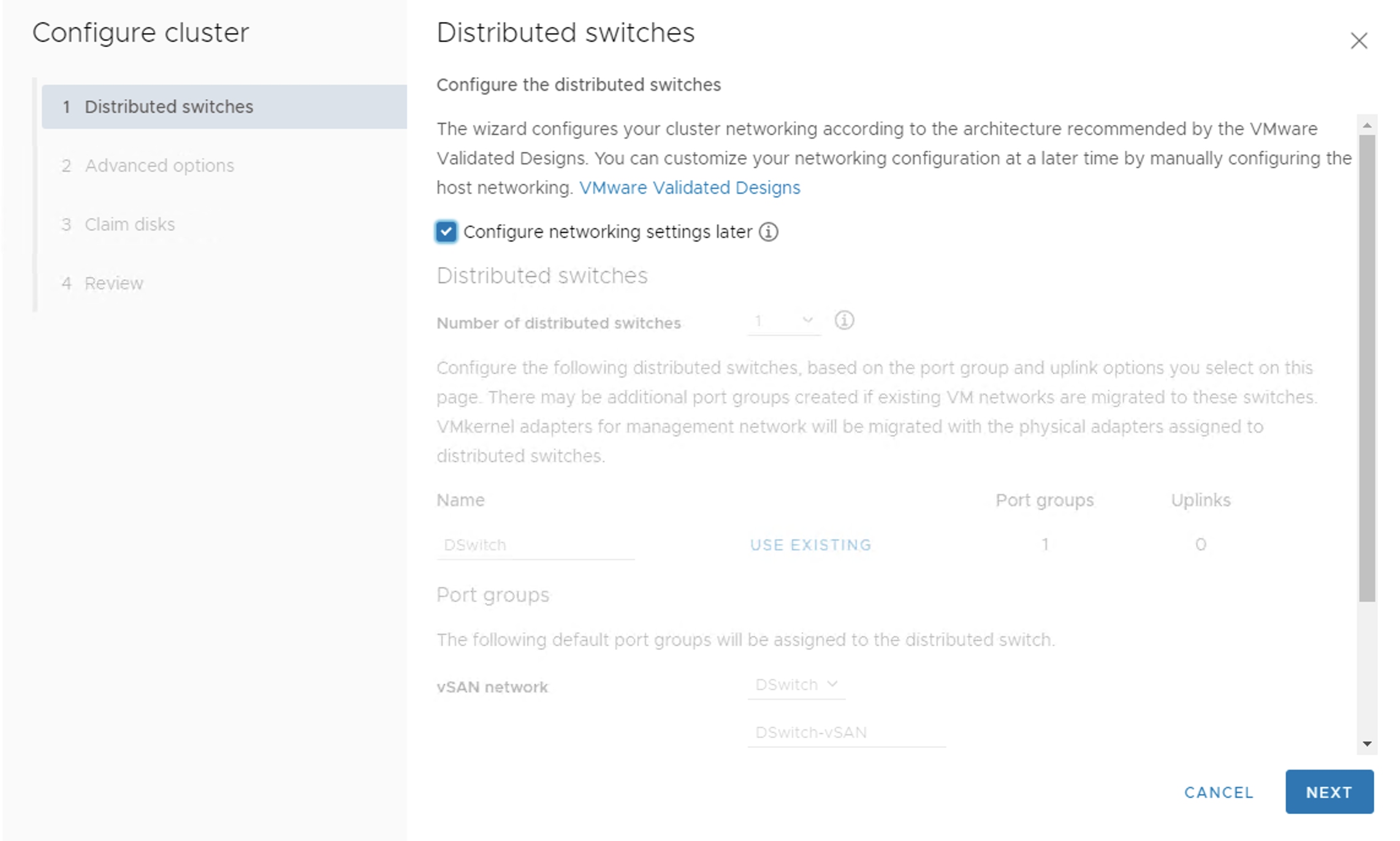

I already have a distributed virtual switch in use and will not be using the network configuration wizard.

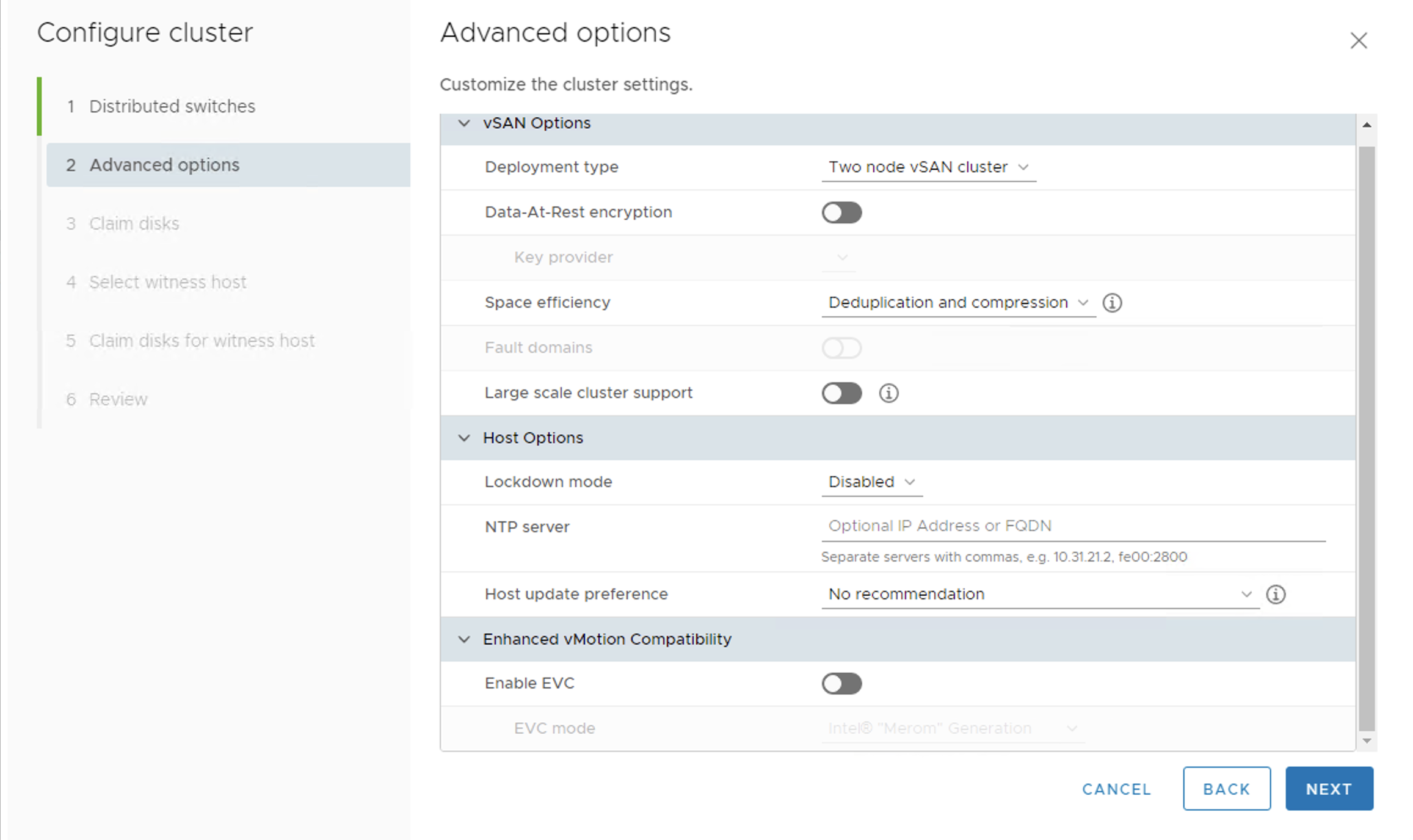

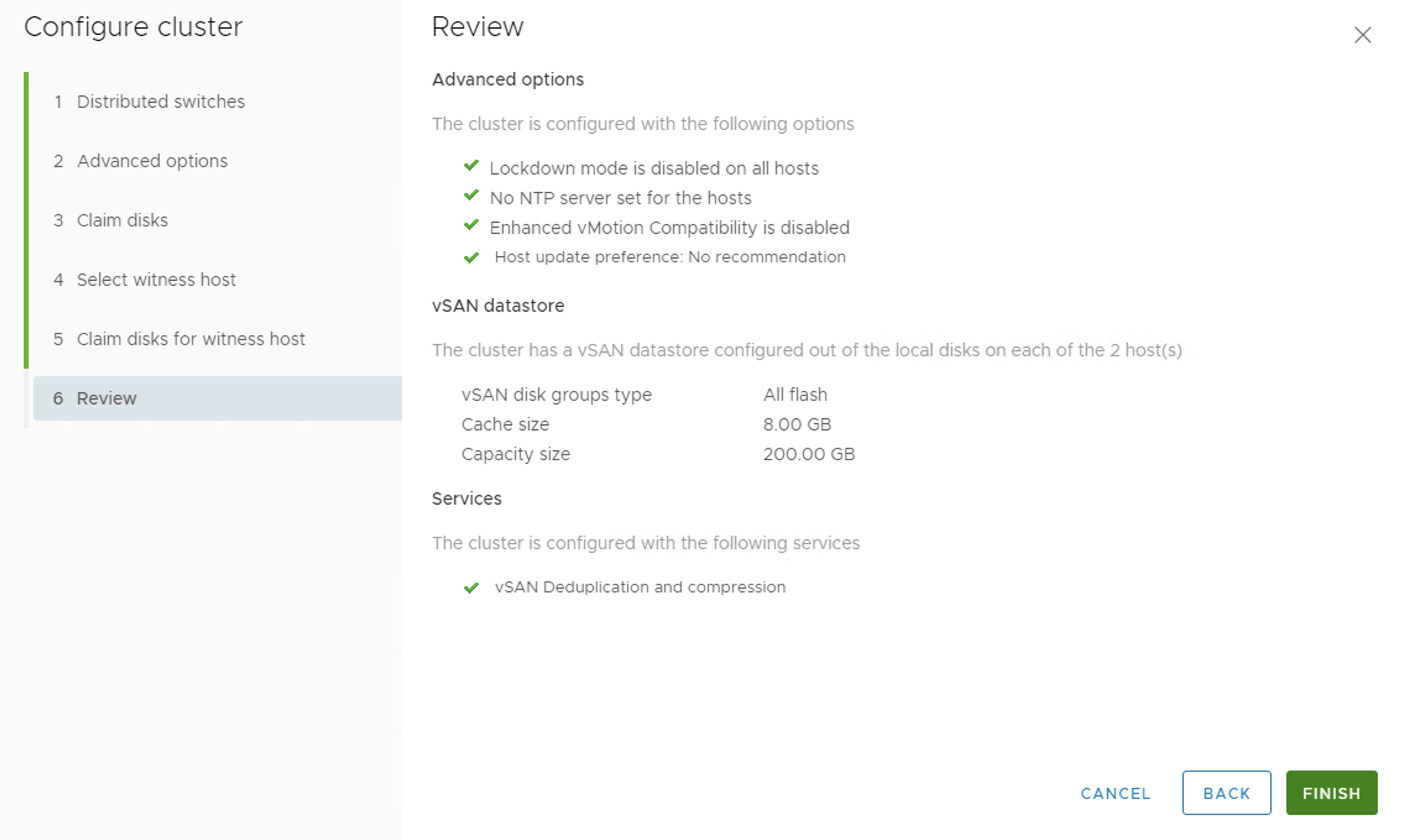

I selected the 2-node option, enabled deduplication and compression, and chose not to be notified about host update preferences for now.

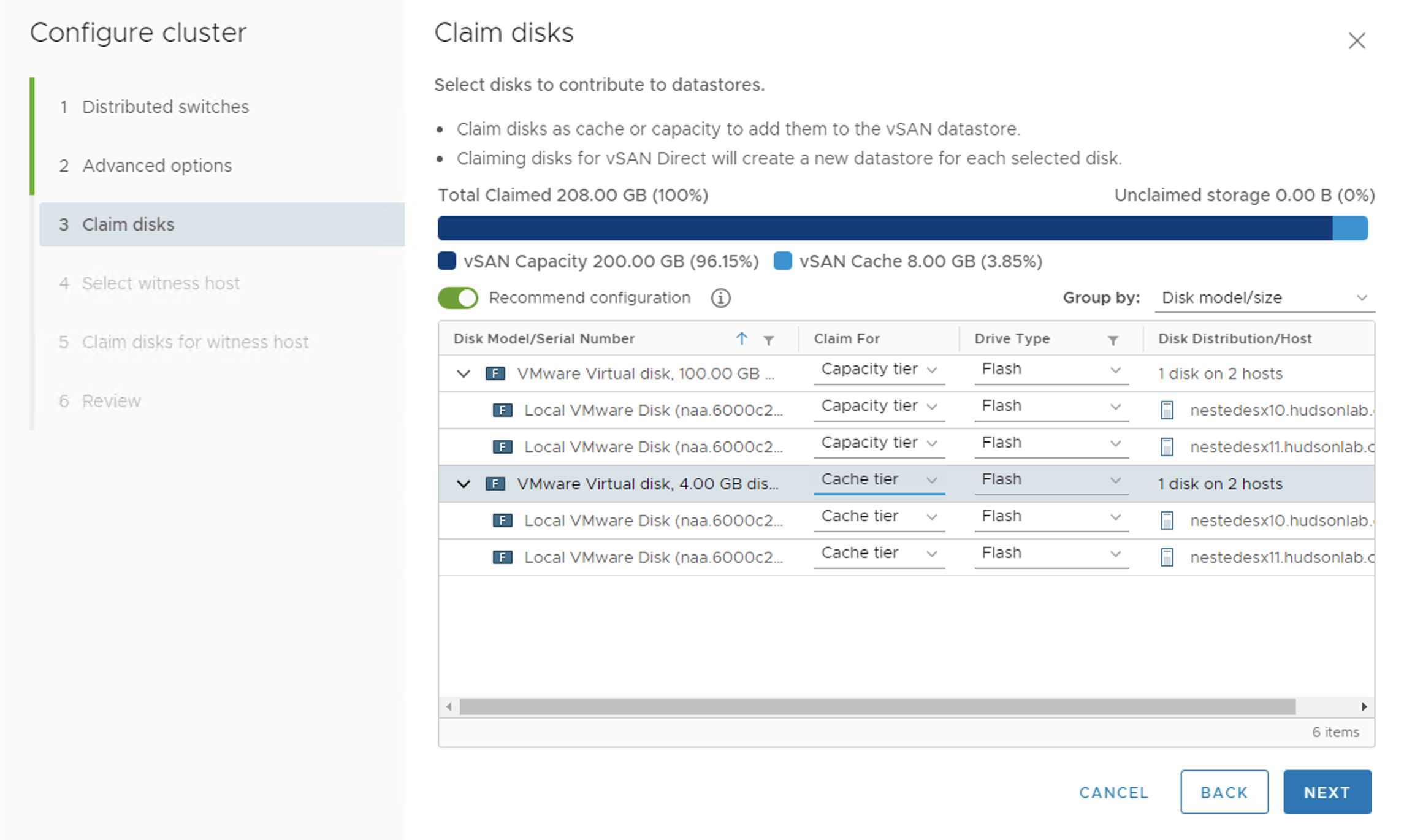

I’m choosing my 4GB drives as the caching tier and my 100GB drives as the capacity tier.
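The tier choice above can be sketched as a small function that mirrors it: on each host, the smaller disk goes to the caching tier and the larger one to the capacity tier. This is a minimal sketch, not how vSAN itself claims disks (in the wizard you pick them by hand). The disk labels are hypothetical, and the only vSAN rule encoded is the original storage architecture's disk group limit of one cache device plus up to seven capacity devices.

```python
from dataclasses import dataclass


@dataclass
class Disk:
    name: str      # hypothetical label, not a real ESXi device identifier
    size_gb: int


# vSAN OSA disk group: 1 cache device plus 1 to 7 capacity devices.
MAX_CAPACITY_PER_GROUP = 7


def claim_disks(disks):
    """Mirror the choice made in the wizard: the smallest disk goes to the
    cache tier and every remaining disk goes to the capacity tier."""
    if len(disks) < 2:
        raise ValueError("a disk group needs one cache disk and at least one capacity disk")
    ordered = sorted(disks, key=lambda d: d.size_gb)
    cache, capacity = ordered[0], ordered[1:]
    if len(capacity) > MAX_CAPACITY_PER_GROUP:
        raise ValueError("too many capacity disks for a single disk group")
    return cache, capacity


# Hypothetical inventory matching one nested host in this post:
# a 100GB disk and a 4GB disk.
host_disks = [Disk("disk-a", 100), Disk("disk-b", 4)]
cache, capacity = claim_disks(host_disks)
print(cache.size_gb, [d.size_gb for d in capacity])  # 4 [100]
```

The same split applies to the caching and capacity drives selected on the Pi witness host.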

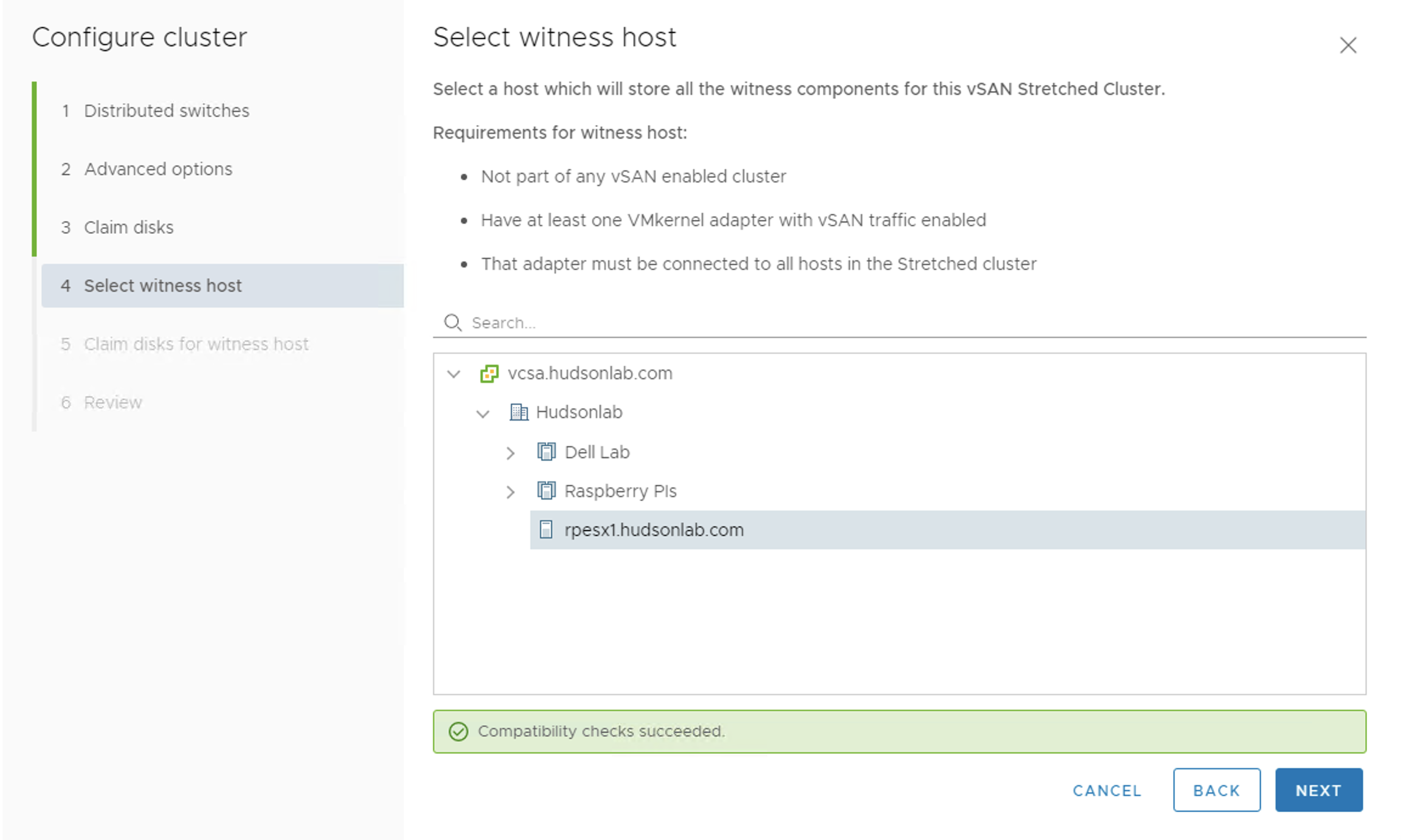

This time around, I'm selecting the ESXi host running the VMware ESXiOnArm Fling on my Raspberry Pi 4 as the witness for the 2-node vSAN cluster.

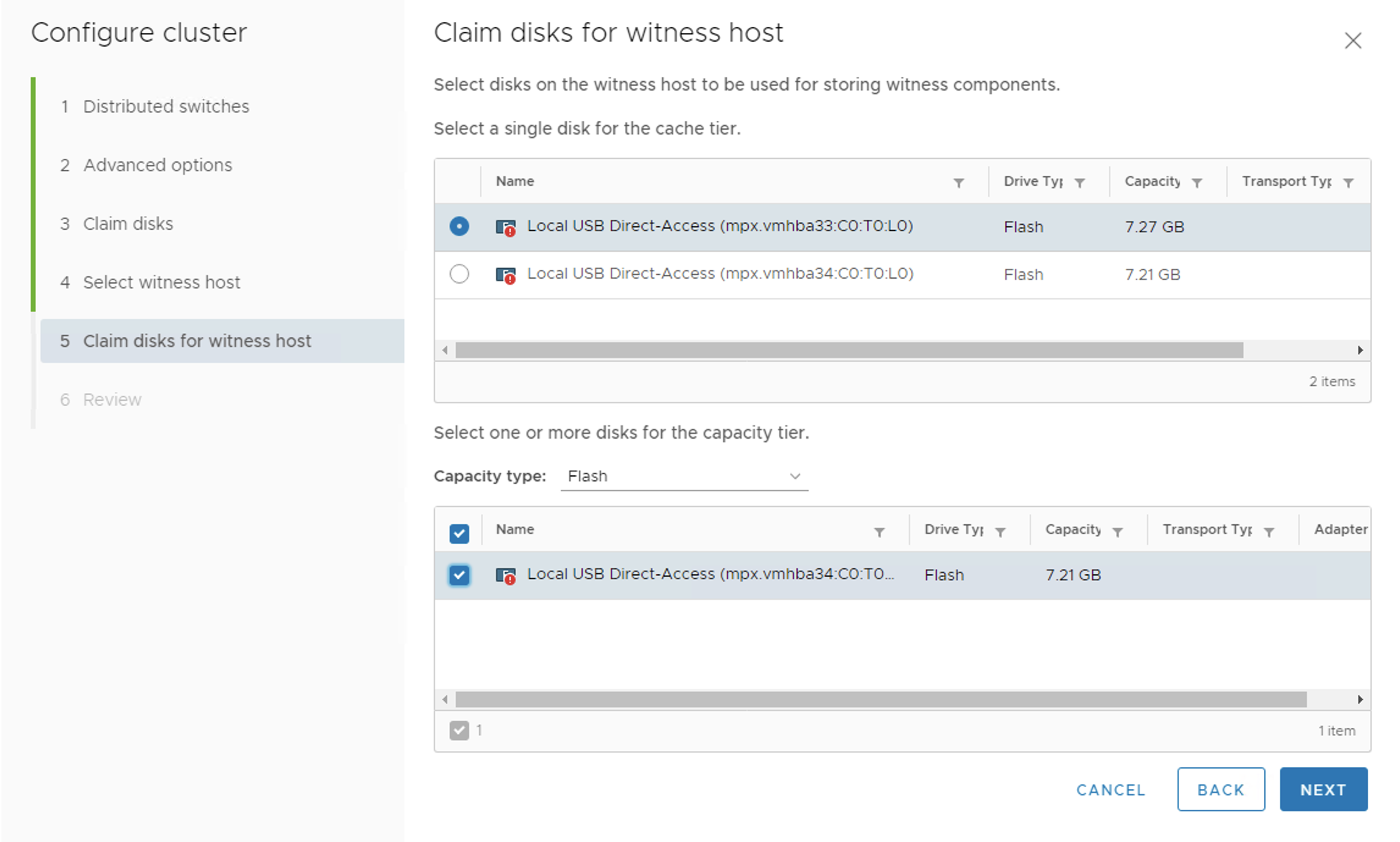

Then I select the caching drive and the capacity drive on the Pi host.

Once that's done, a summary page shows how the cluster will be configured. All the hosts already have NTP configured.

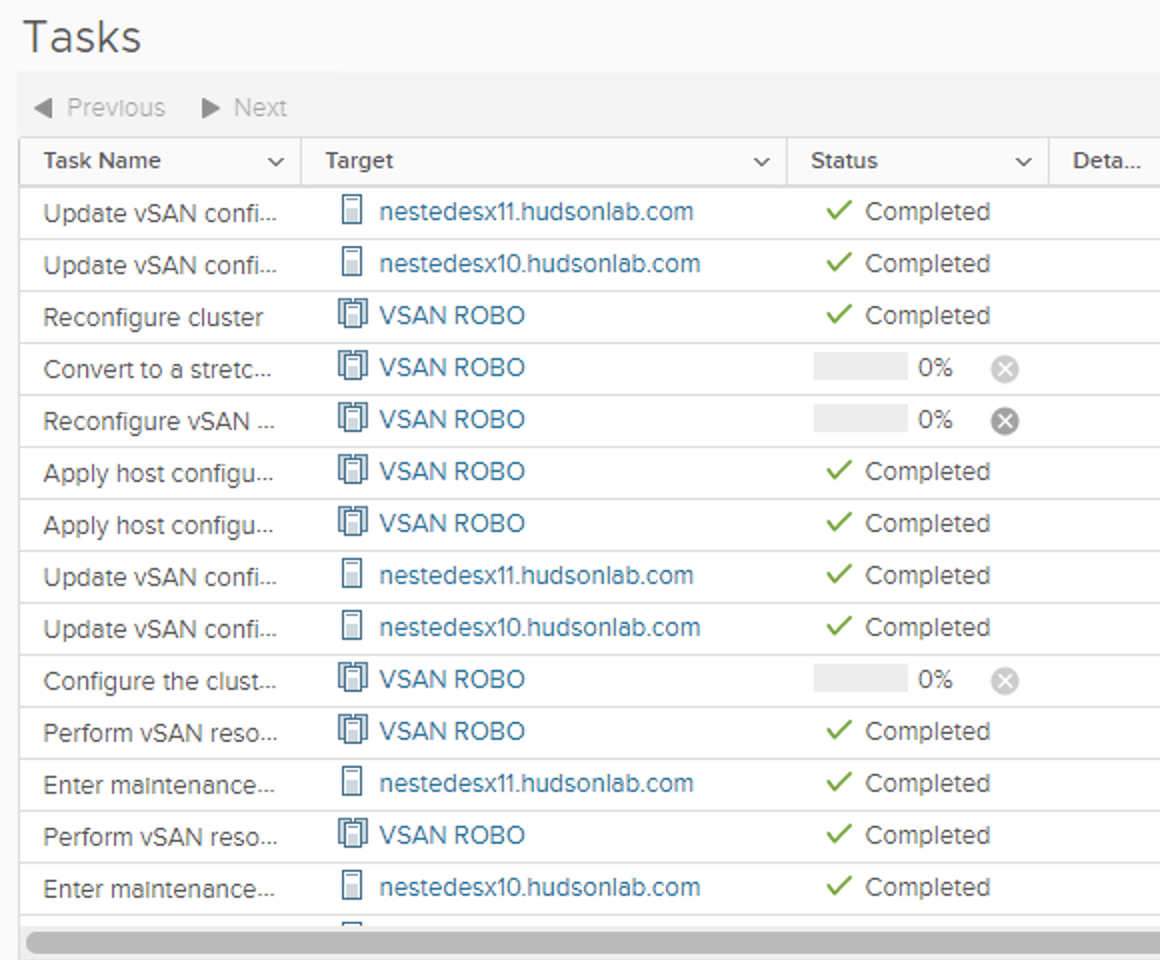

In the cluster's tasks view, you can follow the progress as the wizard converts the cluster to a stretched cluster using the remote witness and creates the vSAN datastore.
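Because a 2-node cluster is built as a stretched cluster, each mirrored object gets a replica on each data host plus a witness component on the witness host, and it stays accessible only while more than half of its votes and at least one full replica survive. The sketch below is a simplified model under stated assumptions: one vote per component (real vSAN can weight votes differently) and hypothetical host names. It shows why a witness in the same room matters. Any single failure is tolerated, but anything that takes out a data host and the Pi together leaves the object inaccessible.

```python
from itertools import combinations

# Simplified model of one FTT=1 object in a 2-node vSAN cluster.
# Assumption: one vote per component; host names are hypothetical.
DATA_HOSTS = ("esxi-01", "esxi-02")
COMPONENTS = {"esxi-01": 1, "esxi-02": 1, "rpi-witness": 1}


def object_accessible(failed_hosts):
    total = sum(COMPONENTS.values())
    alive = sum(v for host, v in COMPONENTS.items() if host not in failed_hosts)
    # Quorum: strictly more than half of the votes must survive...
    has_quorum = alive * 2 > total
    # ...and at least one full data replica must still be available.
    has_data = any(host not in failed_hosts for host in DATA_HOSTS)
    return has_quorum and has_data


# Walk every combination of failed hosts, from none up to all three.
for n in range(len(COMPONENTS) + 1):
    for failed in combinations(COMPONENTS, n):
        print(sorted(failed), object_accessible(set(failed)))
```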

Once it completes, I have a 2-node vSAN cluster with a 200GB vSAN datastore ready to use.
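The 200GB figure is simply the two 100GB capacity drives added together; the witness stores witness components, not VM data, so it adds no datastore capacity. With the default FTT=1 mirroring, every VM is written twice, so the effective space for VMs is roughly half. This is a back-of-the-envelope sketch that ignores deduplication and compression savings, slack space and on-disk format overhead; the function name is hypothetical.

```python
def two_node_capacity_gb(capacity_gb_per_host, data_hosts=2, ftt=1):
    """Return (raw, usable) capacity for a RAID-1 mirrored 2-node cluster.

    Only the data hosts contribute capacity. Ignores dedup/compression
    savings, slack space and on-disk format overhead.
    """
    raw = capacity_gb_per_host * data_hosts
    usable = raw / (ftt + 1)  # FTT=1 mirroring stores two full copies
    return raw, usable


raw, usable = two_node_capacity_gb(100)
print(raw, usable)  # 200 100.0
```

In other words, a 40GB VM disk consumes about 80GB of the datastore before any space-efficiency savings.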

Now it's time to test a VM deployment or two, but I'll need to turn on DRS and vSphere HA to really take advantage of a resilient vSphere and vSAN cluster that could serve a remote edge use case. Hopefully you found this valuable.
